An Occam’s Razor for Very-Hot Hot Jupiters

Editor’s note: Astrobites is a graduate-student-run organization that digests astrophysical literature for undergraduate students. As part of the partnership between the AAS and astrobites, we occasionally repost astrobites content here at AAS Nova. We hope you enjoy this post from astrobites; the original can be viewed at astrobites.org!

Title: H− Opacity and Water Dissociation in the Dayside Atmosphere of the Very Hot Gas Giant WASP-18 b

Authors: Jacob Arcangeli, Jean-Michel Desert, Michael R. Line, et al.

First Author’s Institution: University of Amsterdam, the Netherlands

Status: Accepted to ApJ

Disclaimer: Vatsal Panwar works in the same department as the lead author, but he did not have any scientific involvement in this project.

Hot Jupiters — one of the first types of exoplanets to be detected — have continued to challenge our understanding of planetary systems since their discovery. Their relative size, mass, and proximity to the host star make them the easiest exoplanets for detection and atmospheric characterization, especially from ground-based instruments; it’s no wonder that recent wide-angle surveys from the ground (WASP, KELT, and MASCARA, to name a few) have been quite successful in finding these gas giants.

Artist’s illustration of WASP-18b. Insets show the optical and X-ray views of the system. [X-ray: NASA/CXC/SAO/I.Pillitteri et al; Optical: DSS]

Recent studies of some very-hot hot Jupiters suggest the presence of a thermal inversion in their atmospheres, akin to what happens in Earth’s stratosphere due to the presence of ozone. In the case of hot Jupiters, the energy from stellar irradiation is thought to be absorbed by gas-phase TiO and VO. While a thermal inversion is not totally unexpected in these atmospheres, the anomalously high values of metallicity and C/O ratio suggested by these studies (both are frequently used indicators of chemistry and abundances in exoplanet atmospheres) indicate that there is more to the atmospheres of very hot Jupiters than meets the eye. Today’s paper tries to resolve this issue by taking a cue from the conditions in stellar photospheres with effective temperatures similar to those of very hot gas-giant exoplanets.

Who’s Drinking All the Water?

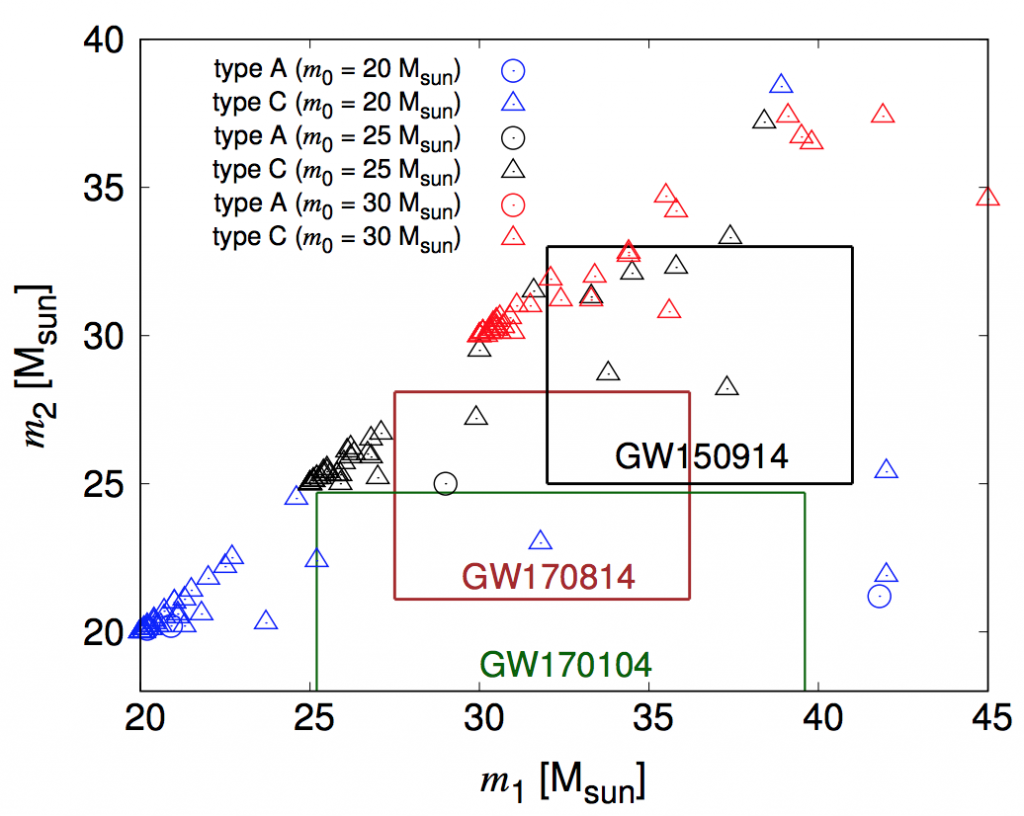

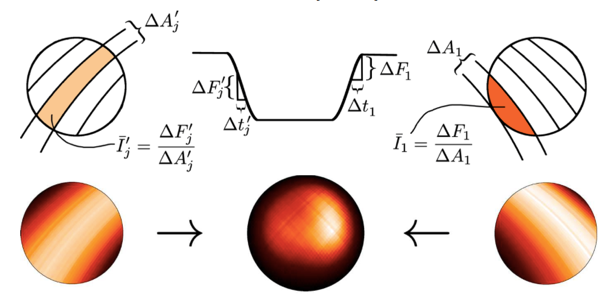

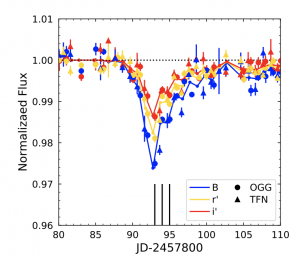

Water is one of the most prominent sources of opacity taken into account by theoretical models of hot Jupiters’ emission spectra in the wavelength range probed by today’s paper (see Figure 1). Despite the low spectral resolution of the Spitzer/IRAC bands, there is a good indication of spectral features around 4.5 μm. An emission feature in a band where the atmosphere is optically thick (because opacity sources, including water in this case, absorb relatively more in that band) can typically be explained by the presence of a thermal inversion. The absence of the corresponding water features expected around 1.4 μm doesn’t quite fit this picture, though. A high C/O ratio could be invoked in this case, as that would drive the chemistry of the atmosphere to deplete water and its features while still allowing an inverted atmospheric profile. However, the authors suggest that water at the high-temperature and low-pressure conditions of very hot Jupiters should instead undergo thermal dissociation. For comparison, stellar photospheres with similar effective temperatures have higher pressures (due to higher surface gravities) that prevent water from thermally dissociating, so water shows up in their spectra.
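The link between a thermal inversion and an emission feature can be illustrated with a toy calculation. The sketch below assumes a constant opacity within each band, hydrostatic balance, and a temperature that changes linearly with the logarithm of pressure. The function names and every number are hypothetical choices for illustration, not values or methods from the paper.

```python
import math

def photosphere_pressure(opacity, gravity):
    """Pressure (Pa) at which the optical depth reaches 1 for a constant opacity.

    Hydrostatic balance gives a column mass of P / g above pressure P,
    so tau = opacity * P / g, and tau = 1 at P = g / opacity.
    """
    return gravity / opacity

def temperature(pressure, t_ref, p_ref, gradient):
    """Toy temperature-pressure profile (hypothetical form).

    The temperature changes by `gradient` kelvin per decade of pressure
    above the reference level p_ref. A positive gradient means the gas is
    hotter at lower pressure, i.e. the profile is inverted.
    """
    return t_ref + gradient * math.log10(p_ref / pressure)

def brightness_temperature(opacity, gravity, t_ref, p_ref, gradient):
    """Temperature of the layer we see in a band with the given opacity."""
    p_phot = photosphere_pressure(opacity, gravity)
    return temperature(p_phot, t_ref, p_ref, gradient)

# Hypothetical illustration values (SI units), not taken from the paper.
GRAVITY = 100.0          # m/s^2
T_REF, P_REF = 2800.0, 1.0e5   # K at 1 bar
WINDOW_OPACITY = 1.0e-3  # m^2/kg, band with little absorption
BAND_OPACITY = 1.0e-2    # m^2/kg, band with strong absorption

for label, gradient in [("inverted", 300.0), ("non-inverted", -300.0)]:
    t_window = brightness_temperature(WINDOW_OPACITY, GRAVITY, T_REF, P_REF, gradient)
    t_band = brightness_temperature(BAND_OPACITY, GRAVITY, T_REF, P_REF, gradient)
    kind = "emission" if t_band > t_window else "absorption"
    print(f"{label}: window {t_window:.0f} K, absorbing band {t_band:.0f} K -> {kind} feature")
```

In the strongly absorbing band we see a higher, lower-pressure layer. If that layer is hotter (an inverted profile), the band looks brighter than its surroundings and appears as an emission feature; if it is cooler, the same band appears in absorption.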

Another key factor used by the authors to explain the absence of water features is the presence of H− ions, whose opacity becomes important in the temperature range of 2500–8000 K. The effect of H− opacity has been included in atmospheric models for brown dwarfs and hot Jupiters in the past, but it has not been considered when retrieving the properties of very hot gas giants. H− ions can be generated in the dayside atmospheres of very-hot hot Jupiters through the thermal dissociation of molecular hydrogen, combined with the ample supply of free electrons from metal ionization at high temperatures.
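The role of pressure in thermal dissociation follows from textbook chemical equilibrium. As a minimal sketch, consider pure hydrogen gas with the reaction H2 ⇌ 2H and an equilibrium constant K_p = p_H² / p_H2. If a fraction α of the molecules is dissociated at total pressure P, then K_p = 4α²P / (1 − α²), which gives α = sqrt(K_p / (K_p + 4P)). The value of K_p used below is a placeholder (the real constant depends steeply on temperature), and the pure-hydrogen setup is a simplifying assumption rather than the chemistry network used in the paper.

```python
import math

def h2_dissociated_fraction(k_p, pressure):
    """Equilibrium fraction of H2 molecules split into atoms (H2 <-> 2H).

    Assumes a pure hydrogen gas. `k_p` is p_H**2 / p_H2 and must be given
    in the same pressure units as `pressure`.
    """
    return math.sqrt(k_p / (k_p + 4.0 * pressure))

# Placeholder equilibrium constant at one fixed (unspecified) temperature.
K_P_HYPOTHETICAL = 0.01  # bar, illustration only

# Compare a high-pressure layer with a low-pressure layer at the same temperature.
for pressure_bar in (10.0, 1.0, 0.1, 0.01):
    alpha = h2_dissociated_fraction(K_P_HYPOTHETICAL, pressure_bar)
    print(f"P = {pressure_bar:5.2f} bar -> dissociated fraction {alpha:.3f}")
```

At a fixed temperature, the dissociated fraction grows as the pressure drops. This is the same qualitative trend invoked for water: a low-pressure planetary dayside can dissociate molecules that survive in a higher-pressure photosphere at a similar temperature.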

Figure 1: The emission spectrum of WASP-18b (shown by the black points) is obtained by observing secondary eclipses, when the planet passes behind the star along our line of sight. Comparing the flux just before and after an eclipse with the flux during the eclipse lets us measure the flux emitted by the day side of the planet (shown on the y-axis). The excess in flux around 4.5 μm indicates the presence of emission features. However, the spectrum is featureless in the band probed by the HST/WFC3 instrument, which is explained as the combined effect of H− opacity and water depletion due to thermal dissociation. This can be seen from the opacity cross sections of H− and water (shown by the red and blue curves, with values on the right-hand y-axis) around the HST/WFC3 bandpass. [Arcangeli et al. 2018]
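To get a feel for the quantity measured in a secondary eclipse, a featureless baseline can be sketched by treating both the planet's day side and the star as blackbodies. The eclipse depth is then (Rp/Rs)² × B(λ, T_planet) / B(λ, T_star). The temperatures and radius ratio below are round, hypothetical numbers chosen for illustration, not parameters from the paper.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K

def planck(wavelength_m, temp_k):
    """Blackbody spectral radiance B_lambda in W m^-3 sr^-1."""
    x = H * C / (wavelength_m * K_B * temp_k)
    return 2.0 * H * C**2 / wavelength_m**5 / math.expm1(x)

def eclipse_depth(wavelength_um, t_planet, t_star, radius_ratio):
    """Planet-to-star flux ratio if both bodies radiate as blackbodies."""
    lam = wavelength_um * 1.0e-6  # micron -> metre
    return radius_ratio**2 * planck(lam, t_planet) / planck(lam, t_star)

# Hypothetical round numbers, for illustration only.
T_PLANET = 2900.0    # K, assumed dayside temperature
T_STAR = 6400.0      # K, assumed stellar effective temperature
RADIUS_RATIO = 0.1   # assumed Rp / Rs

for wavelength_um in (1.4, 3.6, 4.5):
    depth = eclipse_depth(wavelength_um, T_PLANET, T_STAR, RADIUS_RATIO)
    print(f"{wavelength_um:.1f} micron: eclipse depth ~ {depth * 1e6:.0f} ppm")
```

Because the planet is cooler than the star, the blackbody depth rises smoothly toward longer wavelengths. Emission or absorption features show up as departures from a smooth curve of this kind.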

Inversion Could Indeed Be a Trend

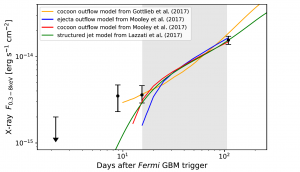

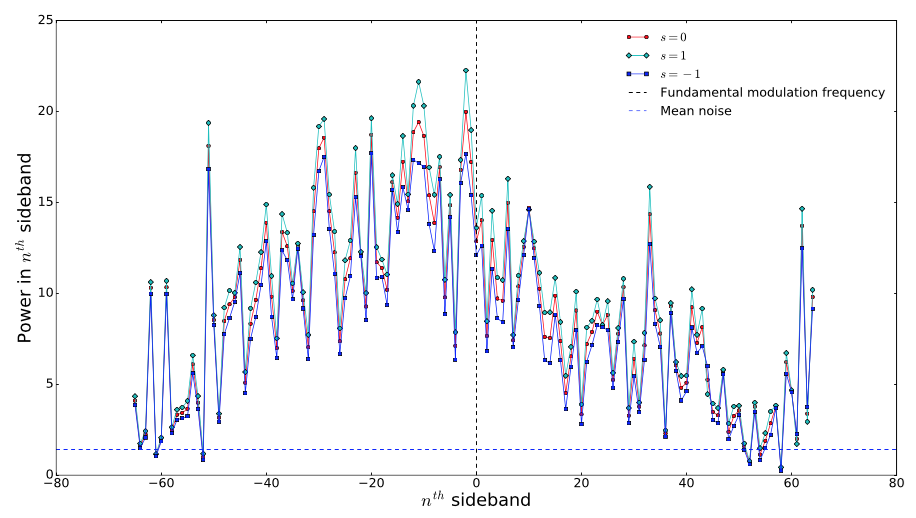

After including both the thermal dissociation of water and the H− opacity contribution in the theoretical models, the retrieved values for the metallicity and C/O ratio of WASP-18b’s atmosphere drop to solar values. The best-fit temperature structure in this case is also inverted, due to the presence of high-altitude absorbers like TiO and VO. However, their features in the emission spectrum are damped by competing absorption due to H− ions (see Figure 1). This is in contrast to earlier retrievals for WASP-18b that suggested super-solar values for its metallicity and C/O ratio, but the new results may be more plausible given the expected formation history for planets in this mass range (see Figure 2).

Figure 2: A comparison of the metallicities of planets with respect to their host stars. Massive gas giants like WASP-18b are not expected to follow the same trend as less massive planets; their metallicities should instead closely resemble those of their host stars. The observations in this paper support this expectation. [Arcangeli et al. 2018]

About the author, Vatsal Panwar:

I am a PhD student at the Anton Pannekoek Institute for Astronomy, University of Amsterdam. I work on characterization of exoplanet atmospheres in order to understand more about the diversity and origins of planetary systems. I also enjoy yoga, exploring world cinema, and pushing my culinary boundaries every weekend.