Counterparts to Gravitational Wave Events: Very Important Needles in a Very Large Haystack

Editor’s note: Astrobites is a graduate-student-run organization that digests astrophysical literature for undergraduate students. As part of the partnership between the AAS and astrobites, we repost astrobites content here at AAS Nova once a week. We hope you enjoy this post from astrobites; the original can be viewed at astrobites.org!

Title: Where and when: optimal scheduling of the electromagnetic follow-up of gravitational-wave events based on counterpart lightcurve models

Authors: Om Sharan Salafia et al.

First Author’s Institution: University of Milano-Bicocca, Italy; INAF Brera Astronomical Observatory, Italy; INFN Sezione di Milano-Bicocca, Italy

Status: Submitted to ApJ, open access

The LIGO Scientific Collaboration’s historic direct detection of gravitational waves (GWs) brought with it the promise of answers to long-standing astrophysical puzzles that were unsolvable with traditional electromagnetic (EM) observations alone. In previous astrobites, we’ve mentioned that an observational approach combining the EM and GW windows into the universe can help shed light on mysteries such as the neutron star (NS) equation of state, and can serve as a unique test of general relativity. Today’s paper tackles the biggest hindrance to EM follow-up of GW events: GW detections don’t localize black hole (BH) and NS mergers well enough to guide a targeted observing campaign with radio, optical, and higher-frequency observatories. While EM counterparts to GW-producing mergers are a needle that’s likely worth searching an entire haystack for, telescope time is precious, and everyone needs a chance to use these instruments for widely varying scientific endeavors.

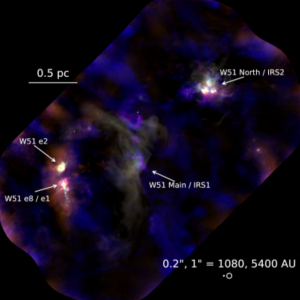

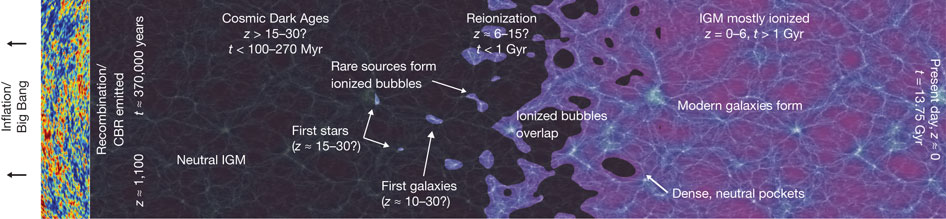

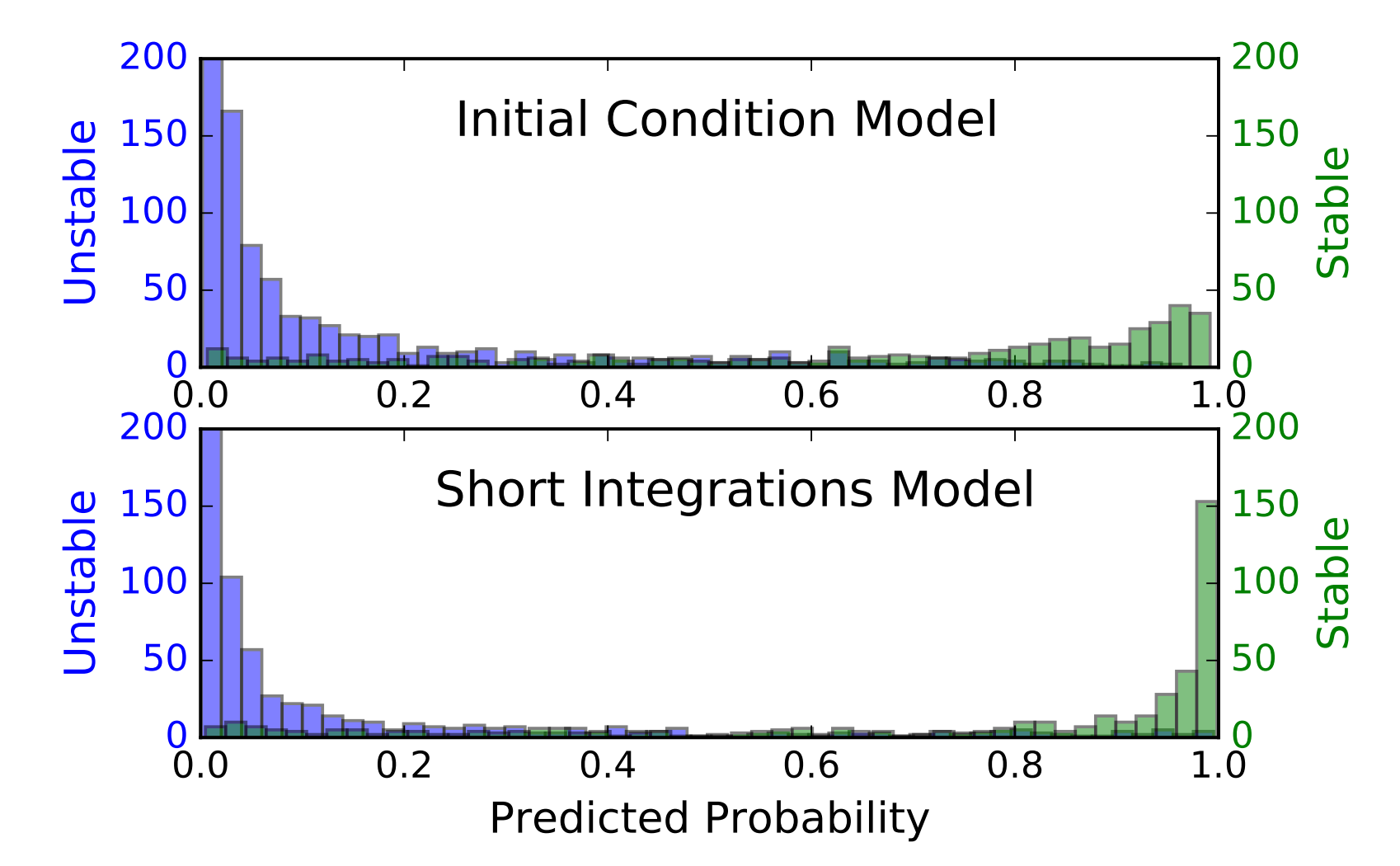

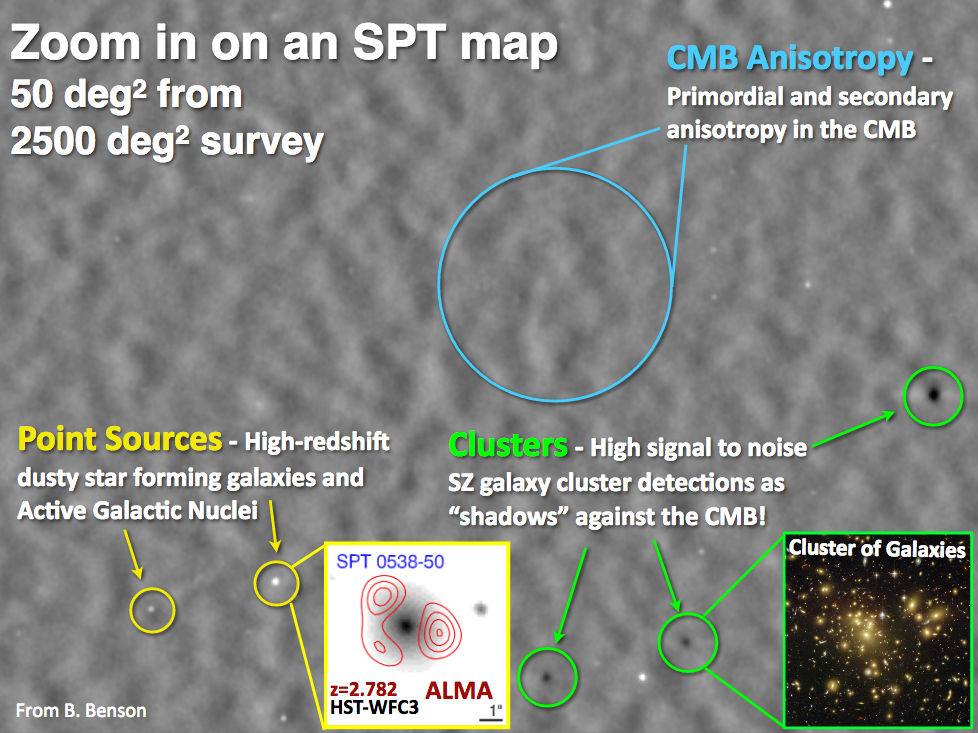

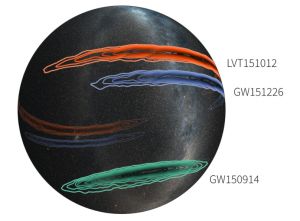

The first GW detection by LIGO, GW150914, was followed up by many observatories that had agreed ahead of time to look for EM counterparts to LIGO triggers. The authors of this study propose to improve upon the near-aimless searches across swaths of hundreds of square degrees that have been necessary following the first few GW candidate events (see Figure 1). Luckily, two key pieces of information can guide the search: properties of the GW source that can be extracted from the LIGO data, and an a priori (in advance) understanding of the EM signal expected from significant GW-producing events.

Figure 1: Simplified skymaps for the two confirmed GW detections and one candidate event (LVT151012), shown as 3D projections onto the Milky Way. The outermost contours enclose the 90% confidence regions, while the innermost enclose 10%. From the LIGO Scientific Collaboration.

What Are We Even Looking For?

Mergers that produce strong GW signals include BH–BH, BH–NS, and NS–NS binary inspirals. GW150914 was a BH–BH merger, which is less likely to produce a strong EM counterpart due to a lack of circumbinary material. The authors of this work therefore focus on the two most likely EM signals following a BH–NS or NS–NS merger. The first is a short gamma-ray burst (sGRB), which would produce an immediate (“prompt”) gamma-ray signal and a longer-lived “afterglow” across a broad range of frequencies. Because of relativistic beaming, prompt sGRB emission is rarely detected: the jet must be pointing in our direction to be seen. GRB afterglows, however, are more easily caught. The second is “macronova” emission from material ejected during the merger; this ejecta contains heavy nuclei whose radioactive decay produces a signal in the optical and infrared shortly after coalescence. One advantage of macronovae is that they’re thought to be isotropic (observable from all directions), so they should be easier to detect than beamed, single-direction sGRBs.
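To put a rough number on “rare,” here is a small Python sketch of the standard geometric estimate: if a double-sided jet has half-opening angle θ_j and a random orientation, the chance that one of its jets points at us is 1 − cos θ_j. The jet angles below are illustrative assumptions, not values taken from the paper.

```python
import math

def on_axis_fraction(theta_jet_deg):
    """Fraction of randomly oriented double-sided jets (half-opening angle
    theta_jet_deg, in degrees) that point toward the observer.

    Each jet fills a cone of solid angle 2*pi*(1 - cos(theta)); the two jets
    together fill 4*pi*(1 - cos(theta)), i.e. a fraction (1 - cos(theta)) of the sky.
    """
    return 1.0 - math.cos(math.radians(theta_jet_deg))

# Illustrative (assumed) jet half-opening angles in degrees
for theta in (5.0, 10.0, 20.0):
    print(f"theta_j = {theta:4.1f} deg -> on-axis fraction = {on_axis_fraction(theta):.4f}")
```

For a 10° jet this gives roughly 1.5%, so under these assumptions only about one merger in 66 would have its prompt emission beamed toward Earth, while an isotropic macronova has no such penalty.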

(Efficiently) Searching Through the Haystack

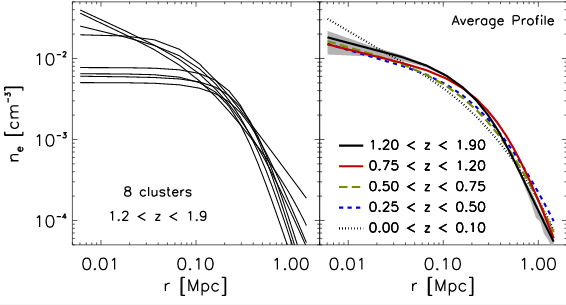

LIGO’s direct GW detection method yields a map of the probability of the merger’s location on the sky (more technically, the posterior probability density for sky position, or “skymap”). The uncertainty in source position is large in part because many parameters gleaned from the received GW signal, like distance, inclination, and merger mass, are degenerate. In other words, many different combinations of parameters can produce the same received signal.
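Contours like the 90% ones in Figure 1 are typically drawn from a pixelized skymap by ranking pixels from most to least probable and adding up probability until the target level is reached. The Python sketch below assumes equal-area pixels (LIGO skymaps use the equal-area HEALPix scheme) and a made-up five-pixel map; the function name and the 20 deg² pixel area are hypothetical.

```python
import numpy as np

def credible_region_pixels(prob, level=0.9):
    """Indices of the smallest set of pixels whose summed probability reaches `level`.

    Assumes all pixels have equal area, so 'fewest pixels' also means 'smallest area'.
    """
    prob = np.asarray(prob, dtype=float)
    prob = prob / prob.sum()              # normalize the skymap to unit total probability
    order = np.argsort(prob)[::-1]        # most probable pixels first
    cumulative = np.cumsum(prob[order])   # probability enclosed as pixels are added
    n_pix = int(np.searchsorted(cumulative, level)) + 1
    return order[:n_pix]

# Hypothetical toy skymap: 5 equal-area pixels of 20 deg^2 each
toy_skymap = [0.10, 0.40, 0.05, 0.30, 0.15]
pixel_area_deg2 = 20.0
region = credible_region_pixels(toy_skymap, level=0.9)
print("90% region pixels:", sorted(region.tolist()))
print("90% region area:", len(region) * pixel_area_deg2, "deg^2")
```

A real skymap has hundreds of thousands of pixels, but the same ranking step is what turns a broad, degenerate posterior into the large search areas the authors want to trim.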

An important dimension that’s missing from the LIGO skymap is time. The skymap alone says nothing about the best time to start looking for the EM counterpart after the GW signal arrives; that requires information about the progenitor system and its expected emission. To produce a so-called “detectability map” showing not only where the merger might be located but also when we’re most likely to observe the resulting EM signal at a given frequency, the authors follow a (somewhat simplified) procedure.

The first available pieces of information are the probability that the EM event, at a given frequency, will be detectable by a certain telescope, and the time evolution of the signal strength. This information is available a priori from a model of the sGRB or macronova. Then, when LIGO detects a GW signal, information about the binary inspiral can be extracted from it. These parameters are combined with the model-based information to create a map that indicates not only where the source is most likely to be, but also when various observatories should look during the EM follow-up period. Such event-specific, time-dependent detectability maps could be created after each GW event, allowing for a much more responsive search for EM counterparts.
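The recipe above can be sketched in a few lines of Python. Everything model-specific here is a stand-in: a hypothetical lightcurve that rises linearly to a peak and then fades as 1/t, flux falling off as 1/d² with distance, and a Gaussian distance estimate for each sky pixel. Each pixel’s skymap probability is weighted by the chance that the counterpart would be brighter than the telescope’s flux limit at time t. This illustrates the idea only; it is not the authors’ actual lightcurve model.

```python
import math

D_REF_MPC = 100.0  # hypothetical reference distance at which the lightcurve is defined

def model_flux_at_ref(t_days, peak_flux=1.0, t_peak_days=2.0):
    """Hypothetical counterpart lightcurve at D_REF_MPC: linear rise to a peak, then 1/t decline."""
    x = t_days / t_peak_days
    return peak_flux * (x if x < 1.0 else 1.0 / x)

def detection_probability(t_days, dist_mean, dist_sigma, flux_limit):
    """P(counterpart flux > flux_limit at time t) for a Gaussian distance estimate."""
    # Flux scales as 1/d^2, so the source is detectable out to d_max
    d_max = D_REF_MPC * math.sqrt(model_flux_at_ref(t_days) / flux_limit)
    # Gaussian CDF evaluated at d_max gives P(d < d_max)
    z = (d_max - dist_mean) / dist_sigma
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def detectability_map(pixels, t_days, flux_limit):
    """Weight each pixel's sky probability by its chance of being detectable at time t."""
    return [
        p["prob"] * detection_probability(t_days, p["dist_mean"], p["dist_sigma"], flux_limit)
        for p in pixels
    ]

# Hypothetical three-pixel skymap with per-pixel distance estimates (Mpc)
pixels = [
    {"prob": 0.5, "dist_mean": 200.0, "dist_sigma": 40.0},
    {"prob": 0.3, "dist_mean": 120.0, "dist_sigma": 30.0},
    {"prob": 0.2, "dist_mean": 80.0, "dist_sigma": 20.0},
]
for t in (0.5, 2.0, 8.0):
    values = detectability_map(pixels, t, flux_limit=0.1)
    print(f"t = {t:3.1f} d:", [round(v, 3) for v in values])
```

In this toy, the distant (but most probable) pixel only becomes worth observing near the lightcurve peak, while nearby pixels stay detectable for longer; that interplay of “where” and “when” is what the detectability maps capture.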

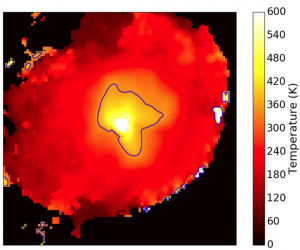

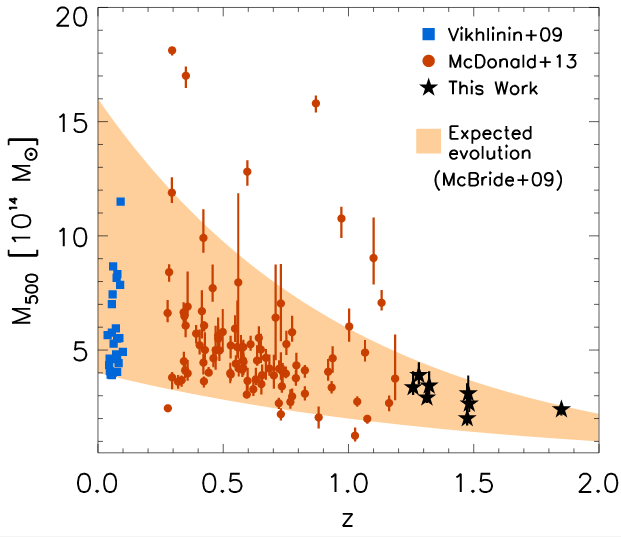

Figure 2: The suggested radio telescope campaign for injection 28840, the LIGO signal used to exemplify a more refined observing strategy. Instead of blindly searching this entire swath of sky, observations are prioritized by signal detectability as a function of time (see color gradient for the scheduled observation times). Figure 8 in the paper.

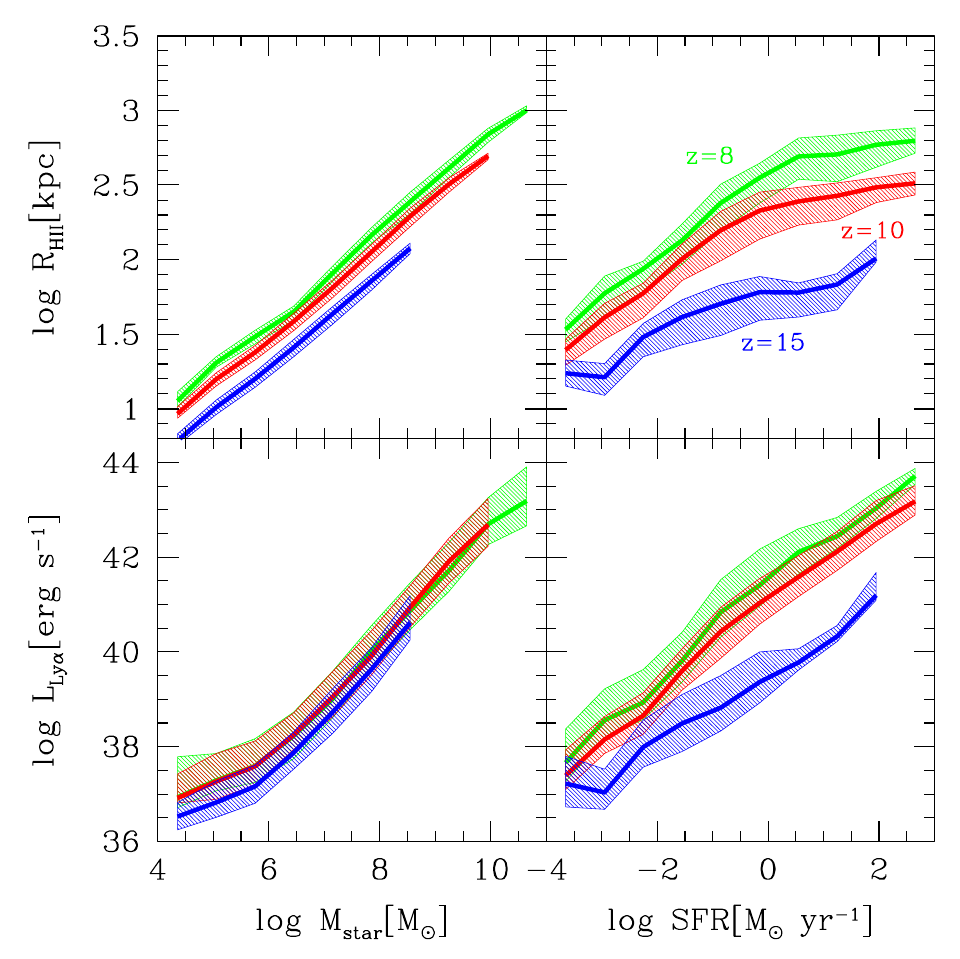

Using these detectability maps to schedule follow-up observations with various telescopes (and therefore at different frequencies) is complicated, to say the least. The authors present a potential follow-up strategy using an actual LIGO injection (a simulated signal added to the data to test detection pipelines) of an NS–NS merger with an associated afterglow. Detectability maps are constructed, and observing strategies are presented, for optical, radio, and infrared follow-up searches (see Figure 2 for an example). Optimizing the search greatly increased the efficiency of follow-up for the chosen injection event: the prioritized radio search, for example, would have found the EM counterpart in 4.7 hours, whereas an unprioritized search could have taken up to 47 hours.
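One simple way to turn time-dependent detectability maps into an observing schedule is a greedy rule: in each time slot, point at the not-yet-observed sky tile with the highest detectability at that moment. The Python sketch below uses a hypothetical four-tile table and compares the result with observing tiles in a fixed order. It is a heuristic for illustration, not the optimization the authors actually perform, and greedy choices are not guaranteed to be optimal in general.

```python
def greedy_schedule(detectability):
    """Greedy sketch: in each time slot k, observe the unobserved tile i with the
    largest detectability[i][k].

    detectability[i][k] is a hypothetical probability that the counterpart lies in
    tile i AND is bright enough to detect if tile i is observed in slot k.
    Returns one tile index per slot until slots or tiles run out.
    """
    n_tiles = len(detectability)
    n_slots = len(detectability[0])
    observed = set()
    schedule = []
    for k in range(n_slots):
        candidates = [i for i in range(n_tiles) if i not in observed]
        if not candidates:
            break
        best = max(candidates, key=lambda i: detectability[i][k])
        observed.add(best)
        schedule.append(best)
    return schedule

def schedule_success_probability(detectability, schedule):
    """Probability of catching the counterpart when tile schedule[k] is observed in slot k.

    Tiles are disjoint sky regions and the source sits in only one of them,
    so the per-tile probabilities simply add.
    """
    return sum(detectability[tile][k] for k, tile in enumerate(schedule))

# Hypothetical detectability table: rows are sky tiles, columns are time slots
table = [
    [0.05, 0.10, 0.30, 0.10],  # tile 0: counterpart expected to peak late
    [0.20, 0.15, 0.05, 0.01],  # tile 1: best observed early
    [0.02, 0.02, 0.02, 0.02],  # tile 2: low probability throughout
    [0.10, 0.25, 0.10, 0.05],  # tile 3
]
greedy = greedy_schedule(table)
fixed = [0, 1, 2, 3]  # observe tiles in index order, ignoring timing
print("greedy order:", greedy, "P(detect) =", round(schedule_success_probability(table, greedy), 3))
print("fixed order: ", fixed, "P(detect) =", round(schedule_success_probability(table, fixed), 3))
```

Here the greedy schedule reaches the late-peaking tile 0 in the slot where it is brightest, which is the basic reason time-aware prioritization can shorten searches like the radio example above.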

Conclusions

The process of refining an efficient method for EM follow-up is distressingly complicated. Myriad unknowns, such as EM signal strength, LIGO instrumental noise, observatory availability, and the source’s visibility on the sky, present a strategic puzzle that needs to be solved in the new era of multimessenger astronomy. This work shows that substantial gains in efficiency are within reach, and that follow-up searches for EM counterparts to GW events will likely become more fruitful as the process is refined.

About the author, Thankful Cromartie:

I am a graduate student at the University of Virginia and completed my B.S. in Physics at UNC-Chapel Hill. As a member of the NANOGrav collaboration, my research focuses on millisecond pulsars and how we can use them as precise tools for detecting nanohertz-frequency gravitational waves. Additionally, I use the world’s largest radio telescopes to search for new millisecond pulsars. Outside of research, I enjoy video games, exploring the mountains, traveling to music festivals, and yoga.