Living a (Solar System) Lifetime in Color

Editor’s note: Astrobites is a graduate-student-run organization that digests astrophysical literature for undergraduate students. As part of the partnership between the AAS and astrobites, we occasionally repost astrobites content here at AAS Nova. We hope you enjoy this post from astrobites; the original can be viewed at astrobites.org.

Title: Col-OSSOS: Color and Inclination are Correlated Throughout the Kuiper Belt

Authors: Michaël Marsset, Wesley C. Fraser, et al.

First Author’s Institution: Queen’s University Belfast, UK

Status: Accepted to AJ

The outer reaches of our own solar system remain a mystery. Astronomers are only just beginning to shine (colored) light on the distant region of our solar system called the Kuiper Belt. But this region has a lot to tell us about the history of the solar system — N-body simulations predict the types of objects we should find and what their orbits should look like. Are they locked into an orbital resonance with Neptune? Have they been flung out of the plane of the solar system by a past interaction with another object? Other studies also predict the types of molecules we should see based on where the objects formed and how they have been flung around the solar system.

From Grayscale to Color

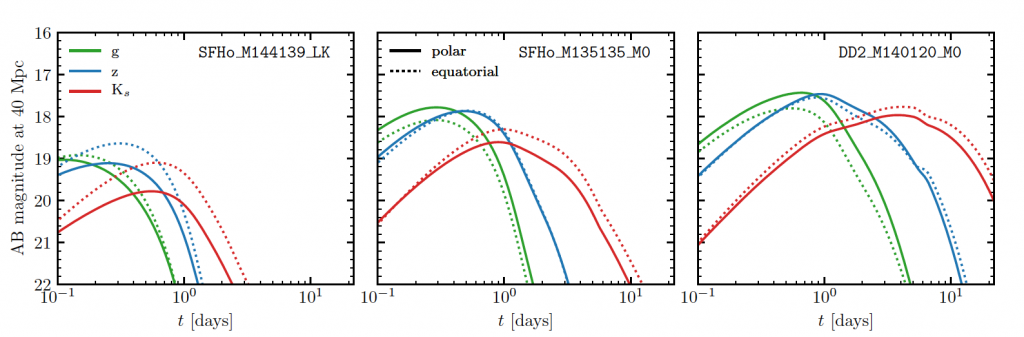

Figure 1. Demonstration of spectroscopy vs. broad-band filter imaging. The spectrum (of a galaxy, for this plot) is shown in black, while the response of each of the BViz filters as a function of wavelength is shown in color. The flux from all wavelengths spanned by a filter is added according to that filter’s response to produce the data points in the top panel. [STScI and Dan Coe]
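The filter step described in the caption can be sketched in a few lines of Python. This is a minimal illustration, not the pipeline used by any survey: the wavelength grid, the toy spectrum, and the two box-shaped filter responses are all made up, and the function names (`trapezoid`, `band_flux`, `color_mag`) are hypothetical. It uses a simple response-weighted average of the flux; real photometry codes also account for details such as photon counting and detector efficiency.

```python
import math

def trapezoid(xs, ys):
    """Integrate ys over xs with the trapezoidal rule."""
    return sum(
        0.5 * (ys[i] + ys[i + 1]) * (xs[i + 1] - xs[i])
        for i in range(len(xs) - 1)
    )

def band_flux(wavelengths, spectrum, response):
    """Response-weighted mean flux density seen through one filter."""
    weighted = [f * r for f, r in zip(spectrum, response)]
    return trapezoid(wavelengths, weighted) / trapezoid(wavelengths, response)

def color_mag(flux_blue, flux_red):
    """Color in magnitudes (blue minus red); larger means redder."""
    return -2.5 * math.log10(flux_blue / flux_red)

# Toy inputs, invented for illustration only.
wavelengths = [400, 450, 500, 550, 600, 650, 700]   # nm
spectrum = [1.0, 1.1, 1.2, 1.3, 1.4, 1.5, 1.6]      # brighter toward the red
blue_response = [0.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0]  # hypothetical blue filter
red_response = [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 0.0]   # hypothetical red filter

flux_blue = band_flux(wavelengths, spectrum, blue_response)
flux_red = band_flux(wavelengths, spectrum, red_response)
print(flux_blue, flux_red, color_mag(flux_blue, flux_red))
```

Each filter collapses a whole stretch of the spectrum into a single number, and the difference between two such numbers (expressed in magnitudes) is what astronomers call a color. In this toy case the red-filter flux is higher than the blue-filter flux, so the color comes out positive, meaning the object is red.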

Today’s authors were specifically interested in how an object’s color correlates with its orbital inclination. Since color and orbital properties like inclination can tell us about an object’s dynamical history, considering both together could potentially place stronger constraints on our solar system’s complicated dynamical past than either alone. The authors selected their sample from Col-OSSOS (the Colours of the Outer Solar System Origins Survey) itself and from previous surveys according to a few criteria:

- Previous surveys considered must have published their telescope pointing history and taken observations in filters comparable to the Col-OSSOS filters.

- Objects must have an orbital inclination greater than 5°, such that they are “dynamically excited.”

- Objects must be smaller than ~440 km, to avoid the range of sizes in which KBOs transition from large and ice-rich to small and depleted of ices. The authors were only interested in colors due to rock composition, not in colors due to the presence of ices.

- Objects must not belong to known families with distinct compositions/colors (like the icy collisional family of Haumea) or pass too close to Jupiter.
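The object-level cuts in the list above amount to a simple filter over a catalog. The sketch below is only an illustration of that logic: the `KBO` record, its field names, and the three toy objects are hypothetical, and the thresholds are the round numbers quoted in the list (the size limit is approximate in the source). The first criterion applies to whole surveys rather than individual objects, so it is not modeled here.

```python
from dataclasses import dataclass

@dataclass
class KBO:
    """Hypothetical catalog record; field names are assumptions."""
    name: str
    inclination_deg: float
    diameter_km: float
    in_known_family: bool
    passes_near_jupiter: bool

MIN_INCLINATION_DEG = 5.0  # "dynamically excited" threshold from the list
MAX_DIAMETER_KM = 440.0    # approximate size limit from the list

def passes_cuts(obj):
    """Return True if an object satisfies all object-level criteria."""
    return (
        obj.inclination_deg > MIN_INCLINATION_DEG
        and obj.diameter_km < MAX_DIAMETER_KM
        and not obj.in_known_family
        and not obj.passes_near_jupiter
    )

# Toy catalog, invented for illustration only.
catalog = [
    KBO("toy-A", 12.0, 150.0, False, False),  # kept
    KBO("toy-B", 2.5, 150.0, False, False),   # rejected: inclination too low
    KBO("toy-C", 28.0, 900.0, True, False),   # rejected: too large, in a family
]

sample = [obj for obj in catalog if passes_cuts(obj)]
print([obj.name for obj in sample])
```

In practice, sizes of distant objects are usually estimated from brightness rather than measured directly, so a real selection would likely be expressed in terms of the measured quantity.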

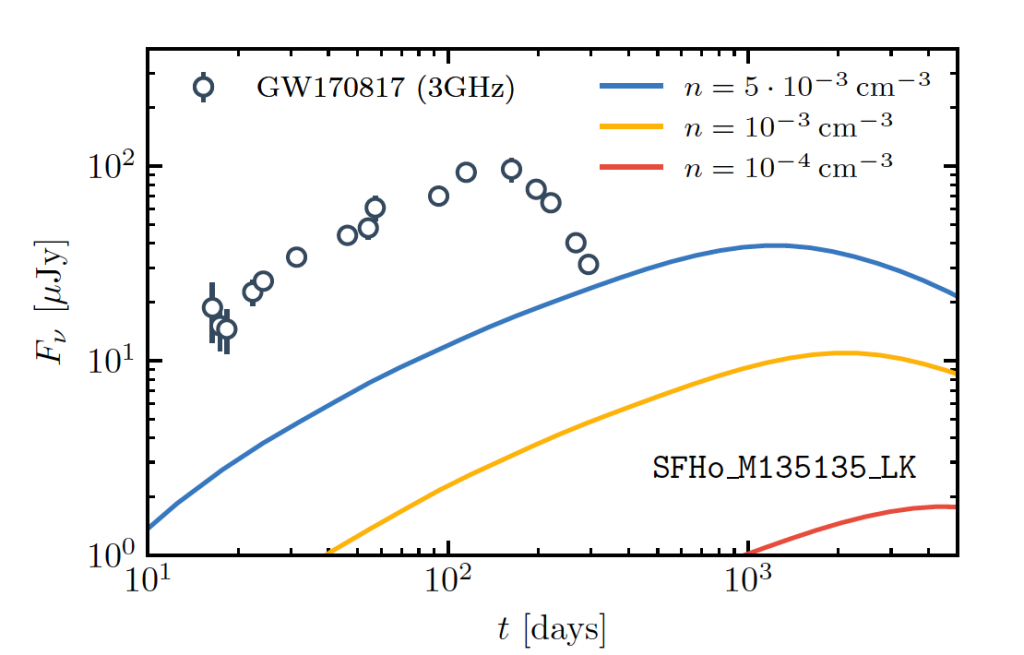

When all was said and done, the authors had a sample of 229 KBOs whose colors fell into two distinct populations that the authors termed “gray” and “red.” They then examined the orbital inclination distributions of the gray and red KBOs in turn.

Colorful Results

Figure 2. The orbital inclination vs. spectral slope for the 229 gray and red KBOs, where the spectral slope is a measure of how an object’s reflectance changes with wavelength; in other words, it is another measure of color. The blue shading represents the smoothed density of data points. The red KBOs have a significantly lower inclination distribution in general than the gray KBOs. [Marsset et al. 2019]
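To make "spectral slope" concrete, here is a minimal sketch of one common way to turn a two-filter color into a slope, expressed as percent change in reflectance per 100 nm. The assumptions are stated loudly: the function names are hypothetical, the reflectance is normalized at the bluer filter (conventions differ between papers, which changes the numbers), and the example color, solar color, and filter wavelengths are illustrative placeholders rather than values from the paper.

```python
def reflectance_ratio(color_mag, solar_color_mag):
    """Red-to-blue reflectance ratio from a (blue minus red) color.

    Subtracting the Sun's color removes the color of the illuminating
    sunlight, leaving only the effect of the object's surface.
    """
    return 10 ** (0.4 * (color_mag - solar_color_mag))

def spectral_slope(color_mag, solar_color_mag, blue_nm, red_nm):
    """Spectral slope in percent per 100 nm, normalized at the blue filter."""
    ratio = reflectance_ratio(color_mag, solar_color_mag)
    return 100.0 * (ratio - 1.0) / ((red_nm - blue_nm) / 100.0)

# Illustrative placeholder values, not measurements.
SOLAR_COLOR = 0.45               # assumed color of the Sun in these filters
BLUE_NM, RED_NM = 480.0, 620.0   # assumed effective filter wavelengths

neutral = spectral_slope(0.45, SOLAR_COLOR, BLUE_NM, RED_NM)
red = spectral_slope(0.90, SOLAR_COLOR, BLUE_NM, RED_NM)
print(neutral, red)
```

An object with exactly the Sun's color reflects all wavelengths equally and has a slope of zero, while an object redder than the Sun has a positive slope. The steeper the slope, the redder the surface.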

But what if the observed trend between color and orbital inclination in the gray and red KBO populations is simply due to biases in the surveys? Previous studies have shown that redder objects tend to be more reflective, making them brighter and more readily detected. The authors also note that few surveys target higher inclinations and that those surveys tend to use redder filters. They use both analytical calculations and a survey simulation code to estimate the effects of these factors. They find that the potential biasing factors would actually result in more red KBOs detected with high orbital inclinations, exactly the opposite of the trend they find in the data! Furthermore, their survey simulations show that the observed sample contains many fewer red objects than their models predict. This implies that the observed color-inclination trend is an intrinsic feature rather than one produced by survey bias.

So why does this trend between color and inclination exist? Prior to today’s paper, two hypotheses competed for the top spot: (1) all KBOs were originally similar, but collisions and other resurfacing processes altered both their colors and inclinations, and (2) the two color populations originally formed in different locations in the protoplanetary disk from different materials, and the gray KBOs were flung outward into the Kuiper Belt. Since collisions affect both orbital inclination and eccentricity, the authors would expect a color-eccentricity trend as well if hypothesis (1) were correct. However, no such trend exists in the data, suggesting that the two color populations did, in fact, originally form in different regions of our solar system.

The results from today’s paper are suggestive of hypothesis (2), yet 229 KBOs is only a tiny fraction of all the KBOs waiting to be discovered and studied. Col-OSSOS is still taking data, and the Large Synoptic Survey Telescope (LSST), which will turn on in 2023, is expected to detect ~40,000 KBOs with well-measured colors. And that will still only be a fraction of the predicted number of KBOs (possibly more than 100,000 larger than 100 km, and even more smaller than that). There is still a lot of solar system to explore!

About the author, Stephanie Hamilton:

Stephanie is a physics graduate student and NSF graduate fellow at the University of Michigan. For her research, she studies the orbits of the small bodies beyond Neptune in order to learn more about the Solar System’s formation and evolution. As an additional perk, she gets to discover many more of these small bodies using a fancy new camera developed by the Dark Energy Survey Collaboration. When she gets a spare minute in the midst of hectic grad school life, she likes to read sci-fi books, binge TV shows, write about her travels or new science results, or force her cat to cuddle with her.