Editor’s Note: This week we’re at the 233rd AAS Meeting in Seattle, WA. Along with a team of authors from Astrobites, we will be writing updates on selected events at the meeting and posting each day. Follow along here or at astrobites.com. The usual posting schedule for AAS Nova will resume next week.

Plenary Talk: The Era of Surveys and the Fifth Paradigm of Science (by Mia de los Reyes)

“Science is changing,” says Alexander Szalay (Johns Hopkins University). Szalay began the first talk of the final day of #AAS233 by describing how the basic paradigm of science has shifted over time. He explained that science was for many centuries empirical, driven by observations of the natural world; as scientists began to explain these observations with underlying physics, it became predominantly theoretical. In recent decades, with the invention of computers, science became computational — and today, the growing dominance of “big data” is shifting science to a data-intensive paradigm.

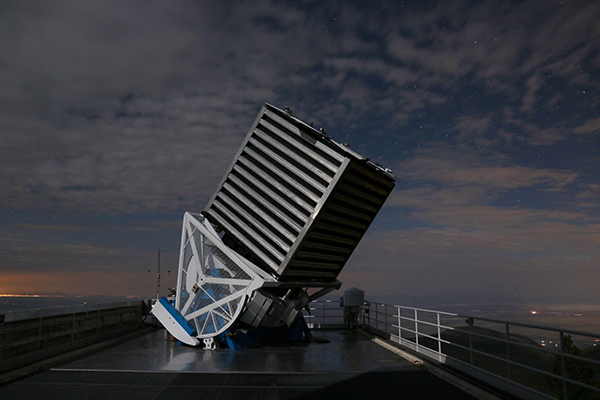

Szalay then described the scientific landscape in this data-intensive world: data is everywhere, and it grows as fast as our computing power! This is primarily thanks to the rise of large surveys over the last 20 years. Szalay himself got involved with the data-driven side of astronomy through one such survey: the Sloan Digital Sky Survey (SDSS), an ambitious project that aimed to measure spectra of millions of objects across the whole sky (check out our coverage of the most recent SDSS press release here). In particular, Szalay helped build the Skyserver database at Johns Hopkins University. He describes Skyserver, the portal to access SDSS’s database, as a “prototype in 21st century data access” — it has become the world’s most used astronomy facility today, with over 1.2 billion web hits in the last twelve years!

The SDSS telescope at night [P. Gaulme]

On the other hand, Szalay then pointed out, SDSS has also revealed some of the major problems with big data. One such issue is lifecycles. Data and data services have finite lifetimes, as data standards, usage patterns, browsers, and software platforms all change. This opens up questions about the cost of preserving valuable data over the long term. Furthermore, we need to consider how to publish big datasets. The old publishing model of including data in printed publications clearly isn’t working, and we’re now moving to open data and open publishing (much in the same way that music publishing has largely transitioned from individual LP sales to distribution applications like Pandora and Spotify).

Szalay then asked, “How long can this proliferation of data go on?” Everyone wants more data, but “big data” also means more “dirty data” with more systematic errors. Szalay believes that we don’t simply need more data — we need to collect data that are more relevant. How do we do that? Perhaps this is the fifth paradigm of science, when algorithms make the decisions not only in data analysis, but also in data collection! For example, machine-learning algorithms could use feedback from observed targets to choose their next targets. Finally, Szalay pointed out that to make the transition to this fifth paradigm, we need to change the way we train scientists: the next generation of scientists should have deep expertise not just in astronomy, but also in data science. So if you’re a young scientist, now might be a good time to start thinking about data!

Press Conference: Exoplanets and Life Beyond Earth (by Vatsal Panwar)

The last press conference on exoplanets was kicked off by AAS Press Officer Rick Feinberg, who remarked on the high share of exoplanet press conferences at this meeting.

Direct imaging of Kappa And b [J. Carson]

Next up was a former Astrobiter! Benjamin Montet (University of Chicago) spoke about attempts to robustly detect transiting exoplanets in star clusters using the Kepler Space Telescope, the gift that keeps on giving. He noted that despite extensive searches for planets in the data gathered by Kepler over ten years, there may still be some planets lurking in the data that we have missed. The initial approach of extracting raw light curves for the Kepler dataset involved assigning a unique set of pixels to a star and performing conventional aperture photometry. However, this approach becomes less reliable if a star is in a crowded field — like in a star cluster. Finding planets in star clusters is useful as their ages can be pegged to the age of the cluster (which is relatively easy to determine). Properties of planets in clusters can hence help in understanding the long-term evolution of planetary systems. In this context Montet has been looking at the cluster NGC 6791, which was observed by Kepler for four years. By using Gaia astrometry of this region to determine accurate positions of stars in this cluster as observed by Kepler, his group has detected a number of planets in the cluster. The lack of short-period planets in this sample could hint at the destruction of hot Jupiters through tidal inspiral. This search also revealed 8 new eclipsing binaries and a few cataclysmic variable stars in the cluster. To conclude, Montet stressed that methods developed in this study will be relevant for looking at planets in star clusters observed by TESS.
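For readers unfamiliar with aperture photometry, here is a minimal sketch of the idea and of why crowding hurts it. This is not the Kepler pipeline or Montet's method: the function name `aperture_flux`, the toy 7x7 image, and all pixel values are assumptions made up for illustration.

```python
import math
from statistics import median


def aperture_flux(image, cx, cy, r_ap, r_in, r_out):
    """Background-subtracted flux inside a circular aperture.

    image is a list of rows of pixel values; (cx, cy) is the assumed
    position of the star in pixel coordinates (column, row).
    """
    aperture, annulus = [], []
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            d = math.hypot(x - cx, y - cy)
            if d <= r_ap:
                aperture.append(value)
            elif r_in <= d <= r_out:
                annulus.append(value)
    # Estimate the sky level per pixel from the surrounding annulus.
    sky = median(annulus)
    return sum(aperture) - sky * len(aperture)


# Toy image: flat sky of 10 counts plus a star adding 100 counts to the
# central pixel (hypothetical numbers, for illustration only).
image = [[10.0] * 7 for _ in range(7)]
image[3][3] += 100.0
flux = aperture_flux(image, cx=3, cy=3, r_ap=1.5, r_in=2.5, r_out=3.5)

# Crowded field: a neighbouring star adds 50 counts one pixel away,
# inside the same aperture, so its light is wrongly credited to the target.
crowded = [row[:] for row in image]
crowded[3][4] += 50.0
crowded_flux = aperture_flux(crowded, cx=3, cy=3, r_ap=1.5, r_in=2.5, r_out=3.5)
```

In the isolated case the sketch recovers the 100 counts of the star; in the crowded case the neighbour's light leaks into the aperture and inflates the measurement, which is the kind of contamination that precise stellar positions can help disentangle.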

Kate Su (University of Arizona) then talked about studies of giant impacts in the context of terrestrial planet formation. The usual pathway of terrestrial planet formation begins with the accumulation of pebbles, leading to the formation of planetesimals, which are the embryos of solid planet cores. However, this last step is also accompanied by the possibility of giant impacts, which might be a contributing factor to the diversity of compositions of the interiors of rocky exoplanets. A giant impact event of a large enough magnitude can trigger a steep brightening of the object in infrared wavelengths, which could be an observational signature of dust produced after the collision. This is what’s believed to have happened around the star NGC 2547-ID8 in 2012 and again in 2014. The Spitzer Space Telescope observed a brightening of this disk in the infrared back in 2012, which shows there was a spike in the amount of dust at that time. Dynamical and collisional simulations of this event have concluded that it would have happened about 0.43 AU from the star, and that the impactor must have been at least 100 km in size. Another impact in 2014 was observed by Spitzer in later stages of the survey, and this was even closer to the star, with an inferred separation of 0.24 AU.

Barnard’s star [Backyard Astronomer; P. Mortfield & S. Cancelli]

Plenary Talk: From Disks to Planets: Observing Planet Formation in Disks Around Young Stars (by Caitlin Doughty)

In this plenary talk, Catherine Espaillat (Boston University) discussed the state of the study of protoplanetary disks and what they tell us about planet formation. Planets form in protoplanetary disks around young stars, and the detected planets are diverse in size, composition, and their distances from host stars. However, both the planets and their formation processes are difficult to observe. Many astronomers pursue direct imaging of these protoplanetary disks, but disks can be easily outshone by bright host stars. This has fueled the need for exoplanetary scientists to cultivate a collection of indirect tracers of the presence of protoplanetary disks, especially in the early days of the field.

Espaillat began by presenting a brief history of the early study of protoplanetary disks, as well as what we currently know about these disks and planet formation. In the 1980s, when the spectral energy distributions of stars were studied, astronomers observed an excess over the anticipated infrared emission. Originally interpreted as possible activity from the chromosphere of the star, these excesses were later explained by the presence of heated dust grains. By the 1990s, the Hubble Space Telescope had confirmed the presence of dusty disks around stars. Magnificent edge-on images of disks were directly observed soon after. Later, the Spitzer Space Telescope and the Atacama Large Millimeter Array discovered gaps in several of these disks, and this culminated with a beautiful image of many small gaps in HL Tauri.

Gaps in the disk of HL Tauri [ALMA]

Next, Espaillat discussed some of the so-called “footprints” of planet formation within protoplanetary disks. Since gaps within disks are believed to be created by planet formation, studies focus on the sizes and locations of gaps. Millimeter observations have shown a multitude of disks with gaps greater than 10 AU, but there is a diverse arrangement of disk structures, showing gaps of many sizes at many distances from the host. Some show bright spots or spirals, but they are typically quite symmetric. The small dust grain distribution in images reveals the flaring at the edges of disks and ripples in their surface structure, highlighting the complexity of their morphologies. Regarding planet formation, it is theorized that planets form most easily at snowlines within the disk, because >1-mm dust grains falling towards the host star may encounter the line and stop their fall, gradually coagulating onto one another to form planetesimals. However, ALMA observations have searched for gaps and planets at snowlines and have yet to turn up any evidence to confirm the theory.

Espaillat at #AAS233: Lots to learn from small grains too — including ripples and shadows on the surface of the disk pic.twitter.com/g0Bf3F1O3r

— astrobites (@astrobites) January 10, 2019

If there is relative consensus about indicators of planet formation, do we have any idea when planets are forming? A logical consideration can provide an intuitive constraint: Planet formation has to occur before the dust disk has dissipated. It is observed that disk frequency around stars falls off with age, with few disks seen around stars older than 10 million years, and some stars even lose their disks at much younger ages. Dust evolution occurs around stars that are estimated to be only 1 million years old (quite young for a star), where indications of dust depletion are seen in the upper disk layers, one of the crucial first steps in planet formation. One interesting discovery is that HL Tauri is still being fueled by the molecular cloud it formed from; if this is a common occurrence, it could extend the potential disk-forming lifetime of stars. This remains an open question.

Espaillat ended the talk by emphasizing a few of the open questions about protoplanetary disks. As an example, how does gas accrete onto the star? Astronomers think that material from the disk “jumps” over the gap between the disk and the star and, tracing the magnetic field lines, is swept by the star’s gravity towards the poles. The amount of material affects the brightness of the accretion signal, meaning that we can use it to measure the accretion rate. It is expected that the rate should be affected by the size of the gap between the disk and the star, but scientists consistently find rates that are similar to one another. Accretion rates can also vary with time: the system GM Aurigae showed an increase in measured accretion rate by a factor of four in a single week! The reason is unknown, but future observations are planned that will hopefully illuminate the cause. Future observations, once enough sensitivity is obtained, should also reveal the formation of moons around exoplanets! Espaillat ended by noting that next-generation telescopes like the Thirty Meter Telescope will help to resolve innermost disk structure, down to 1 AU, to help visualize what is happening there in these mysterious and dynamic objects.

Press Conference: Astronomers Have a Cow (by Mike Zevin)

In the 8th and final press conference of AAS 233, astronomers presented recent observations of everyone’s favorite farmyard animal in astronomy — AT2018cow, known in the astronomy community as simply “The Cow” (though “cow” was actually just a coincidental label assigned to this event). This powerful electromagnetic transient was discovered by the ATLAS telescope on 16 June 2018, residing in a star-forming galaxy approximately 200 million light-years away.

Dan Perley (Liverpool John Moores University) kicked things off by summarizing the discovery — a new type of transient that was about 10 times more luminous than a typical supernova, or about 100 billion times more luminous than the Sun. The Cow was odd because of how “blue” it was, its speedy rise time (reaching its peak luminosity in only about 2 days), and its high-velocity ejecta (which reached speeds of about one tenth the speed of light). Telescopes from all over the world across all wavelengths observed this unique transient. In particular, Perley worked with the GROWTH network — a network of small telescopes around the world that were able to provide a nearly continuous view of the transient; they could observe continuously since there were always telescopes somewhere in the network that weren’t hindered by the pesky Sun. Its slow decay over time indicated that something was keeping it hot for many weeks after the explosion — a “central engine” which continually powered the ejecta. Two classes of models have been proposed for this transient: the tidal disruption of a star by an intermediate-mass black hole or a special type of supernova involving a jet breakout. Perley commented that this explosion was similar to Fast Blue Optical Transients observed by prior surveys.

Location of AT2018cow [SDSS]

Amy Lien (NASA Goddard Space Flight Center & Univ. of Maryland Baltimore County) then continued the barnyard bash by highlighting one particular interpretation of the explosion: that it was caused by the tidal disruption of a white dwarf by a black hole. Since the source wasn’t centrally located in its host galaxy, it is unlikely that a supermassive black hole, the standard culprit of tidal disruption events, was responsible. Instead, it would have had to be disrupted by an intermediate-mass black hole of about 1 million solar masses residing in a globular cluster. Press release

After this, Anna Ho (Caltech) presented a perspective from longer wavelengths — observations in the sub-millimeter (i.e., wavelengths around the size of a grain of sand). The Cow was the brightest millimeter transient ever observed, brighter than any previously observed supernova. It was first observed by the Submillimeter Array (SMA) in Hawaii, and was the first time a transient was observed to brighten at millimeter wavelengths. These observations, as well as follow-up millimeter observations by the Atacama Large Millimeter Array (ALMA), found that the Cow released a large amount of energy into a dense environment, and that it is a prototype for a whole new population of explosions that are prime targets for millimeter observatories.

Closing out the press conference, Raffaella Margutti (Northwestern University) presented a panchromatic view of the Cow, ranging from radio to optical to X-ray radiation. The key conclusions were that the Cow produced a luminous, persistent X-ray source that shone through a dense circumstellar environment rich in hydrogen and helium. Radio observations found a significant peak in the spectra from iron. Furthermore, the evolution of the radio light curve indicates that the explosion occurred in a dense interstellar environment, not something that would be found in a globular cluster. These pieces of evidence support the idea that the transient was caused by the explosion of a massive star and birth of a black hole rather than the tidal disruption of a white dwarf by an intermediate-mass black hole. One of the most exciting features was found in the X-rays — a bump in high-energy X-rays known as a Compton bump. This is totally new for observations of supernovae, but has been observed in systems with accreting black holes, and thus suggests that the Cow had a newly formed and accreting black hole at its center. Each part of the electromagnetic spectrum gave astronomers a different piece of the same puzzle, and these pieces have come together to build a picture of this cow of a discovery. Press release

Plenary Talk: From Data to Dialogue: Confronting the Challenge of Climate Change (by Stephanie Hamilton)

Ten years. That is how long it took to put a man on the Moon. It is also how long we have to address climate change. And if we don’t? Well, the consequences are pretty dire. Dr. Heidi Roop of the University of Washington delivered the penultimate plenary talk of the meeting about climate change and what astronomers can do to help combat it.

Dr. Roop began her plenary by likening astronomers to climate scientists. We both think about unthinkable scales — what does 19,000 light years really mean? Or how about 800,000 years of climate data? We are humans who operate on human scales, and it is nearly impossible for us to wrap our heads around these scales as scientists, let alone for members of the general public. How can we possibly be changing an entire planet? Astronomers and climate scientists also both deal with the unknown. For climate scientists, the uncertainty is humans. Climate change is definitely happening, but the unknown is when we will gather ourselves and how we will mitigate and adapt to the effects of climate change.

The magic number plastered over climate-change coverage in the news is 1.5°C. But why? There is a world of difference between overall global warming of 1°C vs 2°C over pre-Industrial Revolution levels. At 2°C, two billion more people are exposed to rising water levels. Coral reefs will die off. 76 million more people will be affected by drought per month. And much, much more. We are on track to top 1.5°C well before 2050 if we don’t act now — but mitigation by itself is not enough. How we experience the future climate depends both on the actions we take now to mitigate the effects of climate change and on how we prepare for what we’ve already set in motion. How do we adapt to a reality of more frequent and intense forest fires, which will also mean worsening hazardous smoke events? How do we adapt our coastal water treatment plants so that they will survive rising water levels? What trees should we plant today and where? The trees that thrive today almost certainly will not be the ones that thrive in a century.

Why do we care about 1.5 C of warning? #AAS233 pic.twitter.com/RfS7fjBd8o

— Jacob White (@Jacob_White26) January 10, 2019

So what can we as astronomers do? All of these questions seem like insurmountable problems. After all, “solve climate change” is a pretty tall ask, especially for any one person. So how can we start to effect change? One of the most important things is to simply have the conversation and talk about climate change. Only 36% of American adults have that conversation at all. That number is likely higher among scientists, but surveys and polls have shown that simply talking about climate change is the third most influential factor on Americans’ opinions on the subject. Climate scientists have started speaking out in public fora. As astronomers, we have a degree of trust and credibility that even climate scientists don’t have, so we need to talk about the problem.

We also need to make climate change a local problem. As Dr. Roop pointed out, most people on the East Coast probably don’t care about what will happen in Seattle. They care about what will happen in their own communities, so we need to make the effects of climate change clearly local and personal. Polls indicate that most people want action on climate change at all levels of government — so talk to your elected officials about climate-friendly policies and hold them accountable.

One of Dr. Roop’s slides

How do we talk about climate change in a way that people will listen to? Dr. Roop recommended sticking to five simple and sticky themes: it’s real, it’s us, experts agree, it’s bad, and (importantly) there’s hope. Good messages must have emotional appeal and make the facts specific and personal to whoever you are talking to. In the era of the internet and free information, though, misinformation is a serious concern and sometimes also feels like an insurmountable problem. But people do not like being lied to or deceived, so a way to combat misinformation is to teach people the manipulative tactics used to spread it so they will be more on-guard. Finally, simply touting prospects of doom and gloom is not a good way to inspire people to act, so find local stories of hope and action — and spread them. The facts of climate change will follow the stories.

So how do we solve climate change? We teach. We talk. We listen. We learn. And most importantly, we act.

Plenary Talk: Lancelot M. Berkeley Prize: The XENON Project: at the Forefront of Dark Matter Direct Detection (by Nora Shipp)

Elena Aprile (Columbia University) gave the final plenary of the meeting. She presented the Lancelot M. Berkeley Prize Lecture on the search for dark matter with the XENON Project. The XENON Project is an experiment for the direct detection of Weakly Interacting Massive Particles (WIMPs). Aprile reminded us that the WIMP is only one of many classes of dark matter particle, but it is one that fits very nicely into physical theories. XENON is one of several experiments that have pushed down the limits on WIMP models in recent years, limiting the possible range of masses and cross-sections, and narrowing down the range of possible dark-matter particles.

The XENON experiment underground [XENON collaboration]

The background limits on the WIMP dark matter cross section from the XENON1T experiment so far. [Aprile et al. 2017]

The latest version of the XENON experiment is XENON1T, with 1.3 tons of cryogenically cooled liquid xenon, and a smaller background than any previous experiment. It has placed the strongest limits to date on the WIMP scattering cross-section (for spin-independent interactions and a WIMP mass above 6 GeV). This is not the limit of the XENON project, however. Aprile presented plans for XENONnT, an even more powerful detector, with 8.4 tons of liquid xenon, which should improve the sensitivity to dark matter models by another order of magnitude in the coming years.
